Abstract

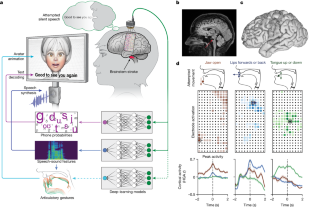

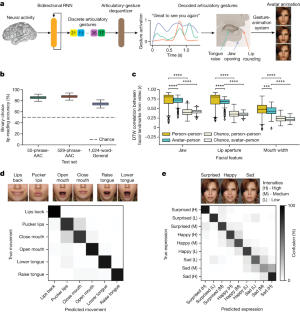

Speech neuroprostheses have the potential to restore communication to people living with paralysis, but naturalistic speed and expressivity are elusive1. Here we use high-density surface recordings of the speech cortex in a clinical-trial participant with severe limb and vocal paralysis to achieve high-performance real-time decoding across three complementary speech-related output modalities: text, speech audio and facial-avatar animation. We trained and evaluated deep-learning models using neural data collected as the participant attempted to silently speak sentences. For text, we demonstrate accurate and rapid large-vocabulary decoding with a median rate of 78 words per minute and median word error rate of 25%. For speech audio, we demonstrate intelligible and rapid speech synthesis and personalization to the participant’s pre-injury voice. For facial-avatar animation, we demonstrate the control of virtual orofacial movements for speech and non-speech communicative gestures. The decoders reached high performance with less than two weeks of training. Our findings introduce a multimodal speech-neuroprosthetic approach that has substantial promise to restore full, embodied communication to people living with severe paralysis.

Data availability

Data relevant to this study are accessible under restricted access according to our clinical trial protocol, which enables us to share de-identified information with researchers from other institutions but prohibits us from making it publicly available. Access can be granted upon reasonable request. Requests for access to the dataset can be made online at https://doi.org/10.5281/zenodo.8200782. Response can be expected within three weeks. Any data provided must be kept confidential and cannot be shared with others unless approval is obtained. To protect the participant’s anonymity, any information that could identify her will not be part of the shared data. Source data to recreate the figures in the manuscript, including error rates, statistical values and cross-validation accuracy will be publicly released upon publication of the manuscript. Source data are provided with this paper.

Code availability

Code to replicate the main findings of this study can be found on GitHub at https://github.com/UCSF-Chang-Lab-BRAVO/multimodal-decoding.

References

-

Moses, D. A. et al. Neuroprosthesis for decoding speech in a paralyzed person with anarthria. N. Engl. J. Med. 385, 217–227 (2021).

-

Peters, B. et al. Brain-computer interface users speak up: The Virtual Users’ Forum at the 2013 International Brain-Computer Interface Meeting. Arch. Phys. Med. Rehabil. 96, S33–S37 (2015).

-

Metzger, S. L. et al. Generalizable spelling using a speech neuroprosthesis in an individual with severe limb and vocal paralysis. Nat. Commun. 13, 6510 (2022).

-

Beukelman, D. R. et al. Augmentative and Alternative Communication (Paul H. Brookes, 1998).

-

Graves, A., Fernández, S., Gomez, F. & Schmidhuber, J. Connectionist temporal classification: labelling unsegmented sequence data with recurrent neural networks. In Proc. 23rd International Conference on Machine learning - ICML ’06 (eds Cohen, W. & Moore, A.) 369–376 (ACM Press, 2006); https://doi.org/10.1145/1143844.1143891.

-

Watanabe, S., Delcroix, M., Metze, F. & Hershey, J. R. New Era for Robust Speech Recognition: Exploiting Deep Learning. (Springer, 2017).

-

Vansteensel, M. J. et al. Fully implanted brain–computer interface in a locked-in patient with ALS. N. Engl. J. Med. 375, 2060–2066 (2016).

-

Pandarinath, C. et al. High performance communication by people with paralysis using an intracortical brain-computer interface. eLife 6, e18554 (2017).

-

Willett, F. R., Avansino, D. T., Hochberg, L. R., Henderson, J. M. & Shenoy, K. V. High-performance brain-to-text communication via handwriting. Nature 593, 249–254 (2021).

-

Angrick, M. et al. Speech synthesis from ECoG using densely connected 3D convolutional neural networks. J. Neural Eng. 16, 036019 (2019).

-

Anumanchipalli, G. K., Chartier, J. & Chang, E. F. Speech synthesis from neural decoding of spoken sentences. Nature 568, 493–498 (2019).

-

Hsu, W.-N. et al. HuBERT: self-supervised speech representation learning by masked prediction of hidden units. IEEE/ACM Trans. Audio Speech Lang. Process. 29, 3451–3460 (2021).

-

Cho, C. J., Wu, P., Mohamed, A. & Anumanchipalli, G. K. Evidence of vocal tract articulation in self-supervised learning of speech. In ICASSP 2023 - 2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) (IEEE, 2023).

-

Lakhotia, K. et al. On generative spoken language modeling from raw audio. In Trans. Assoc. Comput. Linguist. 9, 1336–1354 (2021).

-

Prenger, R., Valle, R. & Catanzaro, B. Waveglow: a flow-based generative network for speech synthesis. In Proc. ICASSP 2019 - 2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) (eds Sanei, S. & Hanzo, L.) 3617–3621 (IEEE, 2019); https://doi.org/10.1109/ICASSP.2019.8683143.

-

Yamagishi, J. et al. Thousands of voices for HMM-based speech synthesis–analysis and application of TTS systems built on various ASR corpora. IEEE Trans. Audio Speech Lang. Process. 18, 984–1004 (2010).

-

Wolters, M. K., Isaac, K. B. & Renals, S. Evaluating speech synthesis intelligibility using Amazon Mechanical Turk. In Proc. 7th ISCA Workshop Speech Synth. SSW-7 (eds Sagisaka, Y. & Tokuda, K.) 136–141 (2010).

-

Mehrabian, A. Silent Messages: Implicit Communication of Emotions and Attitudes (Wadsworth, 1981).

-

Jia, J., Wang, X., Wu, Z., Cai, L. & Meng, H. Modeling the correlation between modality semantics and facial expressions. In Proc. 2012 Asia Pacific Signal and Information Processing Association Annual Summit and Conference (eds Lin, W. et al.) 1–10 (2012).

-

Sadikaj, G. & Moskowitz, D. S. I hear but I don’t see you: interacting over phone reduces the accuracy of perceiving affiliation in the other. Comput. Hum. Behav. 89, 140–147 (2018).

-

Sumby, W. H. & Pollack, I. Visual contribution to speech intelligibility in noise. J. Acoust. Soc. Am. 26, 212–215 (1954).

-

Chartier, J., Anumanchipalli, G. K., Johnson, K. & Chang, E. F. Encoding of articulatory kinematic trajectories in human speech sensorimotor cortex. Neuron 98, 1042–1054 (2018).

-

Bouchard, K. E., Mesgarani, N., Johnson, K. & Chang, E. F. Functional organization of human sensorimotor cortex for speech articulation. Nature 495, 327–332 (2013).

-

Carey, D., Krishnan, S., Callaghan, M. F., Sereno, M. I. & Dick, F. Functional and quantitative MRI mapping of somatomotor representations of human supralaryngeal vocal tract. Cereb. Cortex 27, 265–278 (2017).

-

Mugler, E. M. et al. Differential representation of articulatory gestures and phonemes in precentral and inferior frontal gyri. J. Neurosci. 4653, 1206–1218 (2018).

-

Berger, M. A., Hofer, G. & Shimodaira, H. Carnival—combining speech technology and computer animation. IEEE Comput. Graph. Appl. 31, 80–89 (2011).

-

van den Oord, A., Vinyals, O. & Kavukcuoglu, K. Neural discrete representation learning. In Proc. 31st International Conference on Neural Information Processing Systems 6309–6318 (Curran Associates, 2017).

-

King, D. E. Dlib-ml: a machine learning toolkit. J. Mach. Learn. Res. 10, 1755–1758 (2009).

-

Salari, E., Freudenburg, Z. V., Vansteensel, M. J. & Ramsey, N. F. Classification of facial expressions for intended display of emotions using brain–computer interfaces. Ann. Neurol. 88, 631–636 (2020).

-

Eichert, N., Papp, D., Mars, R. B. & Watkins, K. E. Mapping human laryngeal motor cortex during vocalization. Cereb. Cortex 30, 6254–6269 (2020).

-

Breshears, J. D., Molinaro, A. M. & Chang, E. F. A probabilistic map of the human ventral sensorimotor cortex using electrical stimulation. J. Neurosurg. 123, 340–349 (2015).

-

Simonyan, K., Vedaldi, A. & Zisserman, A. Deep inside convolutional networks: visualising image classification models and saliency maps. In Proc. Workshop at International Conference on Learning Representations (eds. Bengio, Y. & LeCun, Y.) (2014).

-

Umeda, T., Isa, T. & Nishimura, Y. The somatosensory cortex receives information about motor output. Sci. Adv. 5, eaaw5388 (2019).

-

Murray, E. A. & Coulter, J. D. Organization of corticospinal neurons in the monkey. J. Comp. Neurol. 195, 339–365 (1981).

-

Arce, F. I., Lee, J.-C., Ross, C. F., Sessle, B. J. & Hatsopoulos, N. G. Directional information from neuronal ensembles in the primate orofacial sensorimotor cortex. J. Neurophysiol. 110, 1357–1369 (2013).

-

Eichert, N., Watkins, K. E., Mars, R. B. & Petrides, M. Morphological and functional variability in central and subcentral motor cortex of the human brain. Brain Struct. Funct. 226, 263–279 (2021).

-

Binder, J. R. Current controversies on Wernicke’s area and its role in language. Curr. Neurol. Neurosci. Rep. 17, 58 (2017).

-

Rousseau, M.-C. et al. Quality of life in patients with locked-in syndrome: evolution over a 6-year period. Orphanet J. Rare Dis. 10, 88 (2015).

-

Felgoise, S. H., Zaccheo, V., Duff, J. & Simmons, Z. Verbal communication impacts quality of life in patients with amyotrophic lateral sclerosis. Amyotroph. Lateral Scler. Front. Degener. 17, 179–183 (2016).

-

Huggins, J. E., Wren, P. A. & Gruis, K. L. What would brain-computer interface users want? Opinions and priorities of potential users with amyotrophic lateral sclerosis. Amyotroph. Lateral Scler. 12, 318–324 (2011).

-

Bruurmijn, M. L. C. M., Pereboom, I. P. L., Vansteensel, M. J., Raemaekers, M. A. H. & Ramsey, N. F. Preservation of hand movement representation in the sensorimotor areas of amputees. Brain 140, 3166–3178 (2017).

-

Brumberg, J. S., Pitt, K. M. & Burnison, J. D. A noninvasive brain-computer interface for real-time speech synthesis: the importance of multimodal feedback. IEEE Trans. Neural Syst. Rehabil. Eng. 26, 874–881 (2018).

-

Sadtler, P. T. et al. Neural constraints on learning. Nature 512, 423–426 (2014).

-

Chiang, C.-H. et al. Development of a neural interface for high-definition, long-term recording in rodents and nonhuman primates. Sci. Transl. Med. 12, eaay4682 (2020).

-

Shi, B., Hsu, W.-N., Lakhotia, K. & Mohamed, A. Learning audio-visual speech representation by masked multimodal cluster prediction. In Proc. International Conference on Learning Representations (2022).

-

Crone, N. E., Miglioretti, D. L., Gordon, B. & Lesser, R. P. Functional mapping of human sensorimotor cortex with electrocorticographic spectral analysis. II. Event-related synchronization in the gamma band. Brain 121, 2301–2315 (1998).

-

Moses, D. A., Leonard, M. K. & Chang, E. F. Real-time classification of auditory sentences using evoked cortical activity in humans. J. Neural Eng. 15, 036005 (2018).

-

Bird, S. & Loper, E. NLTK: The Natural Language Toolkit. In Proc. ACL Interactive Poster and Demonstration Sessions (ed. Scott, D.) 214–217 (Association for Computational Linguistics, 2004).

-

Danescu-Niculescu-Mizil, C. & Lee, L. Chameleons in imagined conversations: a new approach to understanding coordination of linguistic style in dialogs. In Proc. 2nd Workshop on Cognitive Modeling and Computational Linguistics (eds. Hovy, D. et al.) 76–87 (Association for Computational Linguistics, 2011).

-

Virtanen, P. et al. SciPy 1.0: fundamental algorithms for scientific computing in Python. Nat. Methods 17, 261–272 (2020).

-

Park, K. & Kim, J. g2pE. (2019); https://github.com/Kyubyong/g2p.

-

Graves, A., Mohamed, A. & Hinton, G. Speech recognition with deep recurrent neural networks. In Proc. International Conference on Acoustics, Speech, and Signal Processing (eds Ward, R. & Deng, L.) 6645–6649 (2013); https://doi.org/10.1109/ICASSP.2013.6638947.

-

Hannun, A. et al. Deep Speech: scaling up end-to-end speech recognition. Preprint at https://arXiv.org/abs/1412.5567 (2014).

-

Paszke, A. et al. Pytorch: an imperative style, high-performance deep learning library. In Proc. Advances in Neural Information Processing Systems 32 (2019).

-

Collobert, R., Puhrsch, C. & Synnaeve, G. Wav2Letter: an end-to-end ConvNet-based speech recognition system. Preprint at https://doi.org/10.48550/arXiv.1609.03193 (2016).

-

Yang, Y.-Y. et al. Torchaudio: building blocks for audio and speech processing. In Proc. ICASSP 2022 - 2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) (ed. Li, H.) 6982–6986 (2022); https://doi.org/10.1109/ICASSP43922.2022.9747236.

-

Jurafsky, D. & Martin, J. H. Speech and Language Processing: an Introduction to Natural Language Processing, Computational Linguistics, and Speech Recognition (Pearson Education, 2009).

-

Kneser, R. & Ney, H. Improved backing-off for M-gram language modeling. In Proc. 1995 International Conference on Acoustics, Speech, and Signal Processing Vol. 1 (eds Sanei, S. & Hanzo, L.) 181–184 (IEEE, 1995).

-

Heafield, K. KenLM: Faster and smaller language model queries. In Proc. Sixth Workshop on Statistical Machine Translation, 187–197 (Association for Computational Linguistics, 2011).

-

Panayotov, V., Chen, G., Povey, D. & Khudanpur, S. Librispeech: an ASR corpus based on public domain audio books. In Proc. 2015 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) 5206–5210 (2015); https://doi.org/10.1109/ICASSP.2015.7178964.

-

Ito, K. & Johnson, L. The LJ speech dataset (2017); https://keithito.com/LJ-Speech-Dataset/.

-

van den Oord, A. et al. WaveNet: a generative model for raw audio. Preprint at https://arXiv.org/abs/1609.03499 (2016).

-

Ott, M. et al. fairseq: a fast, extensible toolkit for sequence modeling. In Proc. 2019 Conference of the North American Chapter of the Association for Computational Linguistics (Demonstrations) (eds. Muresan, S., Nakov, P. & Villavicencio, A.) 48–53 (Association for Computational Linguistics, 2019).

-

Park, D. S. et al. SpecAugment: a simple data augmentation method for automatic speech recognition. In Proc. Interspeech 2019 (eds Kubin, G. & Kačič, Z.) 2613–2617 (2019); https://doi.org/10.21437/Interspeech.2019-2680.

-

Lee, A. et al. Direct speech-to-speech translation with discrete units. In Proc. 60th Annual Meeting of the Association for Computational Linguistics Vol. 1, 3327–3339 (Association for Computational Linguistics, 2022).

-

Casanova, E. et al. YourTTS: towards zero-shot multi-speaker TTS and zero-shot voice conversion for everyone. In Proc. of the 39th International Conference on Machine Learning Vol. 162 (eds. Chaudhuri, K. et al.) 2709–2720 (PMLR, 2022).

-

Wu, P., Watanabe, S., Goldstein, L., Black, A. W. & Anumanchipalli, G. K. Deep speech synthesis from articulatory representations. In Proc. Interspeech 2022 779–783 (2022).

-

Kubichek, R. Mel-cepstral distance measure for objective speech quality assessment. In Proc. IEEE Pacific Rim Conference on Communications Computers and Signal Processing Vol. 1, 125–128 (IEEE, 1993).

-

The most powerful real-time 3D creation tool — Unreal Engine (Epic Games, 2020).

-

Ekman, P. & Friesen, W. V. Facial action coding system. APA PsycNet https://doi.org/10.1037/t27734-000 (2019).

-

Gramfort, A. et al. MEG and EEG data analysis with MNE-Python. Front. Neurosci. https://doi.org/10.3389/fnins.2013.00267 (2013).

-

Müllner, D. Modern hierarchical, agglomerative clustering algorithms. Preprint at https://arXiv.org/abs/1109.2378 (2011).

-

Pedregosa, F. et al. Scikit-learn: machine learning in Python. J. Mach. Learn. Res. 12, 2825–2830 (2011).

-

Waskom, M. seaborn: statistical data visualization. J. Open Source Softw. 6, 3021 (2021).

-

Seabold, S. & Perktold, J. Statsmodels: econometric and statistical modeling with Python. In Proc. 9th Python in Science Conference (eds. van der Walt, S. & Millman, J.) 92–96 (2010); https://doi.org/10.25080/Majora-92bf1922-011.

-

Cheung, C., Hamilton, L. S., Johnson, K. & Chang, E. F. The auditory representation of speech sounds in human motor cortex. eLife 5, e12577 (2016).

-

Hamilton, L. S., Chang, D. L., Lee, M. B. & Chang, E. F. Semi-automated anatomical labeling and inter-subject warping of high-density intracranial recording electrodes in electrocorticography. Front. Neuroinform. 11, 62 (2017).

Acknowledgements

We thank our participant, Bravo-3, for her incredible dedication and commitment. We thank T. Dubnicoff for video editing, K. Probst for illustrations, members of the laboratory of E.F.C. for feedback, V. Her for administrative support, and the participant’s family and caregivers for logistic support. We thank C. Kurtz-Miott, V. Anderson and S. Brosler for help with data collection with our participant. We thank T. Li for assistance with generating the participant’s personalized voice. We thank P. Liu for conducting a comprehensive speech–language pathology assessment, B. Speidel for help with imaging reconstruction of the patient’s pial surface and electrode array, the volunteers for the healthy-speaker video recordings, and I. Garner for manuscript review. We also thank the Speech Graphics team, specifically D. Palaz and G. Clarke, for adapting and supporting the technology used in this work and for providing articulatory data. Last, we thank L. Sugrue for preoperative functional magnetic resonance imaging planning. For this work, the National Institutes of Health (grant NINDS 5U01DC018671), Joan and Sandy Weill Foundation, Susan and Bill Oberndorf, Ron Conway, David Krane, Graham and Christina Spencer, and William K. Bowes, Jr. Foundation supported S.L.M., K.T.L., D.A.M., M.P.S., R.W., M.E.D., J.R.L., G.K.A. and E.F.C. K.T.L., P.W. and G.K.A. are also supported by the Rose Hills Foundation and the Noyce Foundation. A.B.S. is supported by the National Institute of General Medical Sciences Medical Scientist Training Program, grant no. T32GM007618. K.T.L. is supported by the National Science Foundation GRFP. A.T.-C. and K.G. did not have relevant funding for this work.

Author information

Contributions

S.L.M. designed and, along with A.B.S., trained and optimized the text decoder, NATO-and-hand-motor classifier and language model. K.T.L. and R.W. designed, trained and optimized the speech-synthesis models with input from G.K.A. K.T.L., M.A.B., D.A.M. and M.E.D. developed software and a user interface to support real-time and offline avatar animation and, along with E.F.C., G.K.A. and S.L.M., designed the avatar-decoding methodology. S.L.M. and K.T.L. implemented the direct-decoding approach to decode articulatory gestures and, along with A.B.S., developed models to classify non-verbal orofacial movements and emotional expressions. D.A.M. managed and coordinated the research project and implemented real-time software infrastructure to collect data and run the tasks. D.A.M., S.L.M. and K.T.L. implemented real-time software to enable real-time text, speech and avatar decoding and designed the tasks and sentence sets. K.T.L. and I.Z. designed and conducted perceptual-accuracy evaluations with decoded speech and avatar animations. K.T.L., P.W. and G.K.A. developed the personalized voice synthesizer. K.T.L., S.L.M. and I.Z. analysed human-speaker data for avatar–human comparisons. A.B.S. and S.L.M. designed and carried out the phone-encoding analyses. A.B.S. designed and carried out the articulatory-encoding analyses. A.B.S., S.L.M. and K.T.L. carried out offline exclusion analyses to assess the importance of electrode density, feature sets and anatomical regions. J.R.L. designed and trained the speech detection model used in freeform text decoding. S.L.M., K.T.L. and A.B.S. carried out statistical analyses and, along with D.A.M., generated figures. D.A.M., K.T.L. and M.E.D. designed graphical user interfaces for text, speech and avatar decoding. S.L.M., K.T.L., A.B.S., D.A.M., M.P.S. and E.F.C. prepared the manuscript with input from other authors. M.P.S., M.E.D. and D.A.M. led the data-collection efforts with help from S.L.M., K.T.L., A.B.S., R.W. and J.R.L. M.P.S. recruited the participant, handled logistical coordination with the participant throughout the study and, together with A.T.-C., maintained and updated the clinical-trial protocol. M.P.S., A.T.-C., K.G. and E.F.C. carried out regulatory and clinical supervision. E.F.C. conceived, designed and supervised the study.

Ethics declarations

Competing interests

S.L.M., D.A.M., J.R.L. and E.F.C. are inventors on a pending provisional UCSF patent application that is relevant to the neural-decoding approaches used in this work. G.K.A. and E.F.C. are inventors on patent application PCT/US2020/028926, D.A.M. and E.F.C. are inventors on patent application PCT/US2020/043706, and E.F.C. is an inventor on patent US9905239B2, which are broadly relevant to the neural-decoding approaches in this work. M.A.B. is chief technical officer at Speech Graphics. All other authors declare no competing interests.

Peer review

Peer review information

Nature thanks Taylor Abel, Nicholas Hatsopoulos, Parag Patil, Betts Peters and Nick Ramsey for their contribution to the peer review of this work.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Extended data figures and tables

Extended Data Fig. 1 Relationship between PER and WER.

Relationship between phone error rate (PER) and word error rate (WER) across n = 549 points. Each point represents the phone and word error rate for all sentences used during model evaluation for all evaluation sets. The points display a linear trend, with the linear fit corresponding to an R² of 0.925. Shading denotes the 99% confidence interval, which was calculated using bootstrapping over 2,000 iterations.
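For readers unfamiliar with the two metrics compared in this figure, the following is a minimal sketch of how an edit-distance-based error rate is commonly computed; the same function yields a WER when given word tokens and a PER when given phone tokens. The function names and the example sentence pair are hypothetical, and the authors' actual evaluation code (for example, how sentences are pooled within a pseudo-block) may differ.

```python
def edit_distance(ref, hyp):
    """Levenshtein distance between two token sequences."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]


def error_rate(ref_tokens, hyp_tokens):
    """Edit distance normalised by the number of reference tokens."""
    if not ref_tokens:
        raise ValueError("reference must contain at least one token")
    return edit_distance(ref_tokens, hyp_tokens) / len(ref_tokens)


# Hypothetical sentence pair (not taken from the study's sentence sets).
reference = "how are you doing today".split()
decoded = "how are you going today".split()
wer = error_rate(reference, decoded)  # one substitution over five words
```

Because the result is normalised by the reference length, values above 100% are possible when the decoded sequence contains many insertions.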

Extended Data Fig. 2 Character and phone error rates for simulated text decoding with larger vocabularies.

a,b, We computed character (a) and phone (b) error rates on sentences obtained by simulating text decoding with the 1024-word-General sentence set using log-spaced vocabularies of 1,506, 2,269, 3,419, 5,152, 7,763, 11,696, 17,621, 26,549 and 39,378 words, and we compared performance to the real-time results using our 1,024-word vocabulary. Each point represents the median character or phone error rate across n = 25 real-time evaluation pseudo-blocks, and error bars represent 99% confidence intervals of the median. With our largest 39,378-word vocabulary, we found a median character error rate of 21.7% (99% CI [16.3%, 28.1%]) and a median phone error rate of 20.6% (99% CI [15.9%, 26.1%]). We compared the WER, CER and PER of the simulation with the largest vocabulary size to the real-time results and found no significant increase in any error rate (P > 0.01 for all comparisons; test statistic = 48.5, 93.0 and 88.0, P = 0.342, 0.342 and 0.239, respectively; Wilcoxon signed-rank test with 3-way Holm-Bonferroni correction).
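The error bars described above are confidence intervals of a median over pseudo-blocks. One common way to obtain such an interval is a percentile bootstrap, sketched below. This is an illustration under stated assumptions rather than the authors' procedure: the caption does not say how the interval was computed, the default of 2,000 resamples is borrowed from the Extended Data Fig. 1 caption, and the 25 error rates are made-up placeholder values.

```python
import random
import statistics


def bootstrap_median_ci(values, n_boot=2000, level=0.99, seed=0):
    """Percentile-bootstrap confidence interval for the median."""
    rng = random.Random(seed)
    n = len(values)
    # Resample with replacement and record the median of each resample.
    medians = sorted(
        statistics.median(rng.choices(values, k=n)) for _ in range(n_boot)
    )
    alpha = (1.0 - level) / 2.0
    lo_idx = int(alpha * n_boot)
    hi_idx = min(n_boot - 1, int((1.0 - alpha) * n_boot))
    return medians[lo_idx], medians[hi_idx]


# Hypothetical per-pseudo-block error rates (n = 25), for illustration only.
block_error_rates = [
    0.18, 0.22, 0.25, 0.19, 0.31, 0.27, 0.21, 0.24, 0.20, 0.29,
    0.23, 0.26, 0.17, 0.28, 0.22, 0.30, 0.19, 0.25, 0.21, 0.24,
    0.27, 0.20, 0.23, 0.26, 0.22,
]
ci_low, ci_high = bootstrap_median_ci(block_error_rates)
```

Fixing the seed makes the interval reproducible; a different seed would shift the bounds slightly.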

Extended Data Fig. 3 Simulated text decoding results on the 50-phrase-AAC sentence set.

a–c, We computed phone (a), word (b) and character (c) error rates on simulated text-decoding results with the real-time 50-phrase-AAC blocks used for evaluation of the synthesis models. Across n = 15 pseudo-blocks, we observed a median PER of 5.63% (99% CI [2.10, 12.0]), median WER of 4.92% (99% CI [3.18, 14.0]) and median CER of 5.91% (99% CI [2.21, 11.4]). The PER, WER and CER were also significantly better than chance (P < 0.001 for all metrics, Wilcoxon signed-rank test with 3-way Holm-Bonferroni correction for multiple comparisons). Statistics compare n = 15 total pseudo-blocks. For PER: stat = 0, P = 1.83e-4. For CER: stat = 0, P = 1.83e-4. For WER: stat = 0, P = 1.83e-4. d, Speech was decoded at high rates with a median WPM of 101 (99% CI [95.6, 103]).

Extended Data Fig. 4 Simulated text decoding results on the 529-phrase-AAC sentence set.

a–c, We computed phone (a), word (b) and character (c) error rates on simulated text-decoding results with the real-time 529-phrase-AAC blocks used for evaluation of the synthesis models. Across n = 15 pseudo-blocks, we observed a median PER of 17.3% (99% CI [12.6, 20.1]), median WER of 17.1% (99% CI [8.89, 28.9]) and median CER of 15.2% (99% CI [10.1, 22.7]). The PER, WER and CER were also significantly better than chance (P < 0.001 for all metrics, two-sided Wilcoxon signed-rank test with 3-way Holm-Bonferroni correction for multiple comparisons). Statistics compare n = 15 total pseudo-blocks. For PER: stat = 0, P = 1.83e-4. For CER: stat = 0, P = 1.83e-4. For WER: stat = 0, P = 1.83e-4. d, Speech was decoded at high rates with a median WPM of 89.9 (99% CI [83.6, 93.3]).
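The captions for Extended Data Figs. 3 and 4 report P values after a 3-way Holm-Bonferroni correction. The sketch below shows the standard step-down adjustment. As an inference of ours that the captions do not state, the reported P = 1.83e-4 is consistent with an exact two-sided signed-rank P value of 2/2^15 (n = 15 pairs, statistic 0) multiplied by the number of comparisons. The function name is hypothetical.

```python
def holm_bonferroni(p_values):
    """Return Holm-Bonferroni adjusted P values in the original order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, idx in enumerate(order):
        # The smallest P value is multiplied by m, the next by m - 1, and so on.
        candidate = min(1.0, (m - rank) * p_values[idx])
        # Enforce monotonicity so adjusted values never decrease with rank.
        running_max = max(running_max, candidate)
        adjusted[idx] = running_max
    return adjusted


# Assumption: exact two-sided signed-rank test, 15 pairs, all differences
# of the same sign (statistic 0), no ties.
raw_p = 2 / 2 ** 15
adjusted = holm_bonferroni([raw_p, raw_p, raw_p])
```

With three equal raw P values, every adjusted value equals three times the raw value, which is why the three metrics would share one corrected P value under this reading.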

Extended Data Fig. 5 Mel-cepstral distortions (MCDs) using a personalized voice tailored to the participant.

We calculated the mel-cepstral distortion (MCD) between decoded speech with the participant’s personalized voice and voice-converted reference waveforms for the 50-phrase-AAC, 529-phrase-AAC and 1024-word-General sets. Lower MCD indicates better performance. We achieved mean MCDs of 3.87 (99% CI [3.83, 4.45]), 5.12 (99% CI [4.41, 5.35]) and 5.57 (99% CI [5.17, 5.90]) dB for the 50-phrase-AAC (N = 15 pseudo-blocks), 529-phrase-AAC (N = 15 pseudo-blocks) and 1024-word-General sets (N = 20 pseudo-blocks), respectively. Chance MCDs were computed by shuffling electrode indices in the test data with the same synthesis pipeline and were computed on the 50-phrase-AAC evaluation set. The MCDs of all sets are significantly lower than chance. 529-phrase-AAC vs. 1024-word-General: ∗∗∗P < 0.001; all other comparisons: ∗∗∗∗P < 0.0001. Two-sided Wilcoxon rank-sum tests were used for comparisons within-dataset and Mann-Whitney U-test outside of dataset with 9-way Holm-Bonferroni correction.
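As a reminder of what the MCD measures, here is a minimal sketch of the commonly used formulation (following Kubichek, cited in the reference list): the Euclidean distance between mel-cepstral coefficient vectors, scaled to decibels and averaged over frames. It assumes the two sequences are already time-aligned and that the caller has dropped the 0th (energy) coefficient. The number of coefficients, the alignment method and the function name are assumptions, not details taken from the paper.

```python
import math


def mel_cepstral_distortion(ref_frames, syn_frames):
    """Mean MCD in dB between two aligned sequences of cepstral vectors."""
    if not ref_frames or len(ref_frames) != len(syn_frames):
        raise ValueError("sequences must be non-empty and equally long")
    # Constant factor (10 / ln 10) * sqrt(2) converts the distance to dB.
    scale = 10.0 / math.log(10.0) * math.sqrt(2.0)
    total = 0.0
    for ref, syn in zip(ref_frames, syn_frames):
        if len(ref) != len(syn):
            raise ValueError("frames must have the same dimensionality")
        squared = sum((r - s) ** 2 for r, s in zip(ref, syn))
        total += scale * math.sqrt(squared)
    return total / len(ref_frames)


# Hypothetical two-frame, three-coefficient example.
reference = [[1.0, 0.5, -0.2], [0.8, 0.4, -0.1]]
synthesized = [[1.1, 0.4, -0.2], [0.7, 0.5, 0.0]]
mcd_db = mel_cepstral_distortion(reference, synthesized)
```

Identical sequences give 0 dB, and larger spectral mismatches give larger values, which matches the caption's note that lower MCD indicates better performance.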

Extended Data Fig. 6 Comparison of perceptual word error rate and mel-cepstral distortion.

Scatter plot illustrating the relationship between perceptual word error rate (WER) and mel-cepstral distortion (MCD) for the 50-phrase-AAC sentence set, the 529-phrase-AAC sentence set and the 1024-word-General sentence set. Each data point represents the mean accuracy from a single pseudo-block. A dashed black line indicates the best linear fit to the pseudo-blocks, providing a visual representation of the overall trend. Consistent with expectation, this plot suggests a positive correlation between WER and MCD for our speech synthesizer.
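The best linear fit and the correlation mentioned in this caption can be obtained with ordinary least squares. The sketch below is illustrative only: the function name and the data points are hypothetical, and the caption does not specify the fitting procedure the authors used.

```python
import math


def linear_fit(x, y):
    """Ordinary least-squares line and Pearson correlation for paired data."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two equally long sequences with n >= 2")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    pearson_r = sxy / math.sqrt(sxx * syy)
    return slope, intercept, pearson_r


# Hypothetical (MCD in dB, perceptual WER) pairs, one per pseudo-block.
mcd_values = [3.8, 4.1, 4.9, 5.2, 5.6, 5.9]
wer_values = [0.08, 0.10, 0.21, 0.24, 0.30, 0.33]
slope, intercept, r = linear_fit(mcd_values, wer_values)
```

A positive slope and a positive `r` would correspond to the trend described in the caption; squaring `r` gives the R² of a single-predictor fit.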

Extended Data Fig. 7 Electrode contributions to decoding performance.

a, MRI reconstruction of the participant’s brain overlaid with the locations of implanted electrodes. Cortical regions and electrodes are colored according to anatomical region (PoCG: postcentral gyrus, PrCG: precentral gyrus, SMC: sensorimotor cortex). b–d, Electrode contributions to text decoding (b), speech synthesis (c), and avatar direct decoding (d). Black lines denote the central sulcus (CS) and sylvian fissure (SF). e–g, Each plot shows each electrode’s contributions to two modalities as well as the Pearson correlation across electrodes and associated p-value.

Extended Data Fig. 8 Effect of anatomical regions on decoding performance.

a–c, Effect of excluding each region during training and testing on text-decoding word error rate (a), speech-synthesis mel-cepstral distortion (b), and avatar-direct-decoding correlation (c; average DTW correlation of jaw, lip, and mouth-width landmarks between the avatar and healthy speakers), computed using neural data as the participant attempted to silently say sentences from the 1024-word-General set. Significance markers indicate comparisons against the None condition, which uses all electrodes. *P < 0.01, **P < 0.005, ***P < 0.001, ****P < 0.0001, two-sided Wilcoxon signed-rank test with 15-way Holm-Bonferroni correction (full comparisons are given in Table S5). Distributions are over 25 pseudo-blocks for text decoding, 20 pseudo-blocks for speech synthesis, and 152 pseudo-blocks (19 pseudo-blocks each for 8 healthy speakers) for avatar direct decoding.

Supplementary information

Supplementary Information

This file contains: a list of investigators; Supplementary Notes 1 and 2 providing additional information about the participant in this study, including her residual articulatory capacity and current assistive communication methods; Methods providing additional methods to support the main results in the manuscript, including text corpora, text decoding, NATO code word and hand movement decoding, synthesis and avatar methods; Figs. 1–20 illustrating further analyses to support the main results in the manuscript (results shown include further details regarding avatar decoding, articulatory analyses, region-specific analyses, synthesis evaluation results and further analysis on phone decoding); Tables 1–14 providing support for the main results in the manuscript, including examples of speech synthesis, comparisons of different methods for synthesis and avatar analyses, results of a user-experience survey and hyperparameter descriptions for models used in the manuscript; references to support the Supplementary Methods; and Clinical Protocol and CONSORT checklist.

Supplementary Video 1

A demonstration of real-time multimodal decoding from brain activity with simultaneous text decoding, speech synthesis and avatar animation. In this task, the participant attempts to silently say sentences from the 50-phrase-AAC sentence set. Once the sentence in white text turns green, the participant attempts to silently say it. Meanwhile, neural data are streamed to a text-decoding model to decode a sequence of phonemes, which is then converted into a sentence, and a speech-synthesis model to decode a sequence of sound features, which is then vocoded into a personalized audible speech waveform and used to simultaneously animate a personalized virtual avatar face. The outputs from all three modalities are simultaneously presented back to the participant (the decoded text is blue).

Supplementary Video 2

A demonstration of generalizable, real-time text decoding from brain activity. In this task, the participant attempts to silently say sentences from the 1024-word-General sentence set. Once the sentence in white text turns green, the participant attempts to silently say it. Meanwhile, neural data are streamed to a phonetic text-decoding model. Three dots appear in the bottom half of the screen once speech is detected by the model (when a non-silence phone is predicted). The decoded text is displayed in the bottom half of the screen (replacing the three dots). These sentences were not attempted by the participant during model training.

Supplementary Video 3

A demonstration of freeform, real-time text decoding from brain activity. Instead of aligning the neural data sent to the text decoder (the same model as was used in Supplementary Video 2) in real time on the basis of the go cue, we instead used detected speech-onset times from a speech-detection model. The participant spontaneously decided what sentence to try to say in each trial. Decoded text replaced the three dots on the lower half of the screen. To confirm the accuracy of each decoded sentence, an experimenter sat in front of the participant (off-screen) and the participant made eye contact with the experimenter when a sentence was decoded correctly.

Supplementary Video 4

A demonstration of real-time classification of NATO code words and attempted finger flexions from brain activity. In the task, the participant attempts to silently say NATO code words and carry out hand-motor movements as prompted by text targets. Each target appears in the top half of the screen and is followed by a countdown. Once the target turns green, the participant attempts to silently say the code word or carry out the hand-motor movement. A classifier processes neural activity associated with the attempt to predict probabilities across the 30 possible targets. Afterwards, the probabilities for the top three classes are shown as a bar chart on the bottom of the screen.

Supplementary Video 5

A demonstration of real-time speech synthesis and avatar animation from brain activity. In the task, the participant attempts to silently say sentences shown on the screen from the 529-phrase-AAC sentence set. Each target sentence is displayed as white text on the screen. Once the sentence turns green, the participant attempts to silently say the sentence. Meanwhile, neural data are streamed to a speech-synthesis model. This model decodes a sequence of sound features, which is then used to generate an audible speech waveform and simultaneously animate a personalized virtual avatar face.

Supplementary Video 6

A demonstration of generalizable, real-time speech synthesis and avatar animation from brain activity. In the task, the participant attempts to silently say sentences shown on the screen from the 1024-word-General sentence set. Each target sentence is displayed as white text on the screen. Once the sentence turns green, the participant attempts to silently say the sentence. Meanwhile, neural data are streamed to a speech-synthesis model. This model decodes a sequence of sound features, which is then used to generate an audible speech waveform and simultaneously animate a personalized virtual avatar face.

Supplementary Video 7

An offline demonstration of personalized speech synthesis and avatar animation from brain activity. In this simulation, we used a voice-conversion algorithm to process speech waveforms decoded from the participant’s brain activity as she attempted to silently say sentences from the 529-phrase-AAC and 1024-word-General sentence sets. This algorithm was conditioned on audio from a short pre-injury video clip of her speaking. The algorithm transformed the decoded waveforms into her personalized voice, which we then used to drive the avatar animation. We synchronized the avatar animation and the personalized decoded voice in this video.

Supplementary Video 8

A demonstration of real-time classification of attempted articulatory movements from brain activity to drive a facial avatar. In the task, the participant attempts to make six non-speech orofacial movements as prompted by text targets. Each target is displayed as white text on the screen. Once the target turns green, the participant attempts to make the prompted articulatory movement. A classifier processes neural activity associated with the attempt to predict which of the six possible articulatory movements the participant had attempted. Afterwards, this prediction is used to animate the avatar from a set of predefined animations associated with each movement.

Supplementary Video 9

A demonstration of real-time classification of attempted emotional expressions from brain activity to drive a facial avatar. In the task, the participant attempts to make three non-speech expressions as prompted by text targets. Each target is displayed as white text on the screen. Once the target turns green, the participant attempts to make the prompted expression. A classifier processes neural activity associated with the attempt to predict which of the three possible expressions the participant had attempted. Afterwards, this prediction is used to animate the avatar from a set of predefined animations associated with each expression.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Metzger, S.L., Littlejohn, K.T., Silva, A.B. et al. A high-performance neuroprosthesis for speech decoding and avatar control. Nature (2023). https://ift.tt/K8D4Y6B

DOI: https://ift.tt/K8D4Y6B
