Since the earliest days of warfare, commanders of forces in the field have sought greater awareness and control of what is now commonly referred to as the "battlespace"—a fancy word for all of the elements and conditions that shape and contribute to a conflict with an adversary, and all of the types of military power that can be brought to bear to achieve their objectives.

The clearer a picture military decision-makers have of the entire battlespace, the better informed their tactical and strategic decisions should be. Bringing computers into the mix in the 20th century meant a whole new set of challenges and opportunities, too. The ability of computers to sort through enormous piles of data to identify trends that aren't obvious to people (something often referred to as "big data") didn't just open up new ways for commanders to get a view of the "big picture"; it let commanders see that picture closer and closer to real time, too.

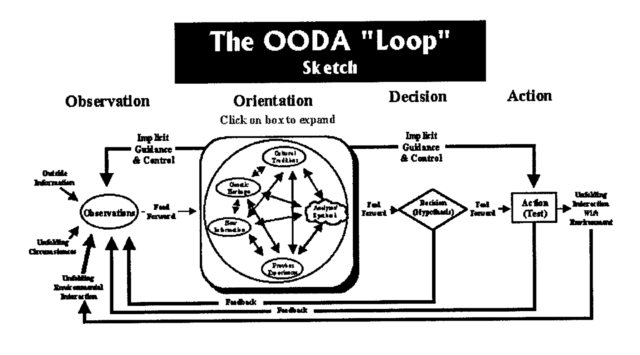

And time, as it turns out, is key. The problem that digital battlespace integration is intended to solve is reducing the time it takes commanders to close the "OODA loop," a concept developed by US Air Force strategist Colonel John Boyd. OODA stands for "observe, orient, decide, act"—the decision loop made repeatedly in responding to unfolding events in a tactical environment (or just about anywhere else). OODA is largely an Air Force thing, but all the different branches of the military have similar concepts; the Army has long referred to the similar Lawson Command and Control Loop in its own literature.

By being able to maintain awareness of the unfolding situation, and respond to changes and challenges more quickly than an adversary can—by "getting inside" the opponent's decision cycle—military commanders can in theory gain an advantage on them and shape events in their favor.

Whether it's in the cockpit or at the command level, speeding up the sensing of a threat and the response to it (did Han really shoot first, or did he just close the OODA loop faster?) is seen by military strategists as the key to dominance of every domain of warfare. However, closing that loop above the tactical level has historically been a challenge, because the communications between the front lines and top-level commanders have rarely been effective at giving everyone a true picture of what's going on. And for much of the past century, the US military's "battlespace management" was designed for dealing with a particular type of Cold War adversary—and not the kind they ended up fighting for much of the last 30 years, either.

Now that the long tail of the Global War on Terror is tapering down to a thin tip, the Department of Defense faces the need to re-examine the lessons learned over the past three decades (and especially the last two). The risks of learning the wrong things are huge. Trillions of dollars have been spent for not much effect over the last few decades. The Army's enormous (and largely failed) Future Combat Systems program and certain other big-ticket technology plays that tried to bake a digitized battlefield into a bigger package have, if anything, demonstrated why pulling off big visions of a totally digitally integrated battlefield carries major risks.

At the same time, other elements of the command, control, communication, computing, intelligence, surveillance and reconnaissance (or just "C4ISR" if you're into the whole brevity thing) toolkit have been able to build on basic building blocks and be (relatively) successful. The difference has often been in the doctrine that guides how technology is applied, and in how grounded the vision behind that doctrine is in reality.

Linking up

In the beginning, there was tactical command and control. The basic technical components of the early "integrated battlespace," namely the automation of situational awareness through technologies such as radar with integrated "Identification, Friend or Foe" (IFF), emerged during World War II. But the modern concept of the integrated battlespace has its most obvious roots in the command and control (C2) systems of the early Cold War.

More specifically, they can be traced to one man: Ralph Benjamin, an electronic engineer. Benjamin, a Jewish refugee, went to work in 1944 for the Royal Naval Scientific Service in what was called the Admiralty Signals Establishment.

"They were going to call it the Admiralty Radar & Signals Establishment," Benjamin recounted in an oral history for the IEEE, "and got as far as printing the first letterheads with ARSE, before deciding it might be more tactful to make it the Admiralty Signals & Radar Establishment (ASRE)." During the war, he worked on a team developing radar for submarines, and also on the Mark V IFF system.

As the war came to an end, he began working on how to improve the flow of C2 information across naval battle groups. It was in that endeavor that Benjamin developed and later patented the display cursor and trackball, the forerunner of the computer mouse, as part of his work on the first electronic C2 system, called the Comprehensive Display System. CDS allowed data shared from all of a battle group's sensors to be overlaid on a single display.

The basic design and architecture of Benjamin's CDS were the foundation for nearly all of the US and NATO digital C2 systems developed over the next 30 years. CDS led to the US Air Force's Semi-Automatic Ground Environment (SAGE), the system used to direct and control North American Air Defense (NORAD), as well as the Navy Tactical Data System (NTDS), which reached the US fleet in the early 1960s. The same technology would be applied to handling antisubmarine warfare (much to the dismay of some Russian submarine commanders) with the ASWC&CS, deployed to Navy ships in the late 1960s and 1970s.

The core of Benjamin's C2 system was a digital data link protocol today known as Link-11 (or MIL-STD-6011). Link-11 is a radio network protocol based on high frequency (HF) or ultra-high frequency (UHF) radio that can transfer data at rates of 1,364 or 2,250 bits per second. Link-11 remains a standard across NATO today, because of its ability to network units not in line of sight, and is used in some form across all the branches of the US military—along with a point-to-point version (Link-11B) and a handful of other tactical digital information link (TADIL) protocols. But all the way up through the 1990s, various attempts to create better, faster, and more applicable versions of Link-11 failed.
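To give a rough sense of what those rates mean in practice, the sketch below estimates how long a message would take to cross a link at the two quoted speeds. The 1,364 and 2,250 bits-per-second figures come from the text above; the 10-kilobyte message size and the function name are hypothetical, and the calculation ignores protocol overhead, error correction, and any polling delays on a real network.

```python
# Rough transfer-time estimate at the Link-11 data rates quoted above.
# Assumption: raw bit rate only, with no framing, error-correction,
# or network-polling overhead.

LINK_11_RATES_BPS = (1364, 2250)  # bits per second, as quoted in the article


def transfer_seconds(message_bytes: int, rate_bps: int) -> float:
    """Return the seconds needed to send message_bytes at rate_bps."""
    if rate_bps <= 0:
        raise ValueError("rate_bps must be positive")
    return message_bytes * 8 / rate_bps


if __name__ == "__main__":
    message_bytes = 10_000  # hypothetical 10 KB report, for illustration only
    for rate in LINK_11_RATES_BPS:
        seconds = transfer_seconds(message_bytes, rate)
        print(f"{rate} bps: {seconds:.1f} s")
```

Even under these generous assumptions, a modest report takes the better part of a minute, which helps explain the long-running push for faster links.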

Alphabet soup: from C2 to C3I to C4ISR

Beyond air and naval operations control, C2 was mostly about human-to-human communications. The first efforts to computerize C2 on a broader level came from the top down, following the Cuban Missile Crisis.

In an effort to speed National Command Authority communications to units in the field in time of crisis, the Defense Department commissioned the Worldwide Military Command and Control System (WWMCCS, or "wimeks"). WWMCCS was intended to give the President, the Secretary of Defense, and Joint Chiefs of Staff a way to rapidly receive threat warnings and intelligence information, and to then quickly assign and direct actions through the operational command structure.

Initially, WWMCCS was assembled from a collection of federated systems built at different command levels—nearly 160 different computer systems, based on 30 different software systems, spread across 81 sites. And that loose assemblage of systems resulted in early failures. During the Six-Day War between Egypt and Israel in 1967, orders were sent by the Joint Chiefs of Staff to move the USS Liberty away from the Israeli coastline, and despite five high-priority messages to the ship sent through WWMCCS, none were received for over 13 hours. By then, the ship had already been attacked by the Israelis.

There would be other failures that would demonstrate the problems with the disjointed structure of C2 systems, even as improvements were made to WWMCCS and other similar tools throughout the 1970s. The evacuation of Saigon at the end of the Vietnam War, the Mayaguez Incident, and the debacle at Desert One during the attempted hostage rescue in Iran were the most visceral of these, as commanders failed to grasp conditions on the ground while disaster unfolded.

These cases, in addition to the failed readiness exercises Nifty Nugget and Proud Spirit in 1978 and 1979, were cited by John Boyd in a 1987 presentation entitled "Organic Design for Command and Control," as was the DOD's response to them:

…[M]ore and better sensors, more communications, more and better computers, more and better display devices, more satellites, more and better fusion centers, etc—all tied to one giant fully informed, fully capable C&C system. This way of thinking emphasizes hardware as the solution.

Boyd's view was that this centralized, top-down approach would never be effective, because it failed to create the conditions key to success—conditions he saw as arising from things purely human, based on true understanding, collaboration, and leadership. "[C2] represents a top-down mentality applied in a rigid or mechanical (or electrical) way that ignores as well as stifles the implicit nature of human beings to deal with uncertainty, change, and stress," Boyd noted.

Those were the elements missing from late Cold War efforts, and what had been called "C2" gained some more Cs and evolved into "C4I"—command, control, communications, computer, and intelligence—systems. Eventually, surveillance and reconnaissance would be tagged onto the initialism, turning it into "C4ISR."

While there were notable improvements in some areas, such as sensors—as demonstrated by the Navy's Aegis system and the Patriot missile system—there was still an unevenness of information sharing. And the Army's C4I lacked any real digital command, control, and communications systems well into the 1990s. Most of the tasks involved were manual and required voice communications or even couriers to verify.

The Gulf War may not have been a true test of battlefield command and control, but it did hint at some of the elements that would both enhance and complicate the battlefield picture of the future. For example, it featured the first use of drones to perform battlefield targeting and intelligence collection, as well as the first surrender of enemy troops to a drone, when Iraqi troops on Faylaka Island signaled their surrender to the USS Wisconsin's Pioneer RPV. It showed the promise of having remotely controlled platforms that could provide actionable information networked into the battlefield information space, something I had seen the early hints of in the late 1980s.

Networked war

And as the Internet economy exploded in the mid 1990s, military thinkers saw the expanding power of networked computing and began to think of how to apply Metcalfe's Law to warfare.
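Metcalfe's Law holds that the value of a network grows roughly with the square of the number of connected nodes, because each new node can link to every node already present. A minimal sketch of that scaling counts the possible pairwise connections; the function name and the node counts are illustrative assumptions, not figures from any military program.

```python
# Illustration of the quadratic growth behind Metcalfe's Law:
# the number of distinct pairs among n nodes is n * (n - 1) / 2.


def pairwise_links(nodes: int) -> int:
    """Return the number of distinct two-way connections among `nodes` nodes."""
    if nodes < 0:
        raise ValueError("nodes must be non-negative")
    return nodes * (nodes - 1) // 2


if __name__ == "__main__":
    for n in (2, 10, 100):  # hypothetical network sizes
        print(f"{n} nodes -> {pairwise_links(n)} possible links")
```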

Military leaders began envisioning an Internet of deadly things. Chief of Naval Operations Admiral Jay Johnson was one of the first to use the term "network-centric warfare," predicting in 1997 that it would spur "speed of command" and "put decision-makers in parallel with shooters in ways that we were unable to do before, and transform warfare from a step function to a continuous process."

Johnson and other senior officers saw network-connected sensors, weapons, and command as a way to create "information superiority, combined with netted, dispersed, offensive firepower." Vice Admiral Arthur Cebrowski, then President of the Naval War College, wrote in a 1998 paper that network-centric warfare would "prove to be the most important RMA [revolution in military affairs] in the past 200 years."

The problem was that the network side of things wasn't really ready. As Johnson was extolling the potentials of networked warfare, the next advancement in tactical data links was just getting off the blackboard after nearly three decades of work. That advancement was a high-capacity tactical data link technology called Link 16, a line-of-sight data link protocol based on time-division multiple access (TDMA) radio networking—the same sort of technology used by cellular phone networks.
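In a TDMA scheme, participants share one radio channel by transmitting only in their assigned time slots. The sketch below shows the general idea with a simple round-robin schedule; the slot length, unit names, and round-robin assignment are all hypothetical and are not taken from the Link 16 specification.

```python
# Generic time-division multiple access (TDMA) illustration.
# Assumption: equal-length slots handed out in round-robin order; real
# tactical data links use their own slot structures and assignment rules.
from itertools import cycle, islice


def round_robin_schedule(participants, slot_ms, total_slots):
    """Return (start_ms, participant) pairs, one per time slot."""
    if not participants:
        raise ValueError("at least one participant is required")
    owners = islice(cycle(participants), total_slots)
    return [(index * slot_ms, owner) for index, owner in enumerate(owners)]


if __name__ == "__main__":
    units = ["ship", "aircraft", "ground_station"]  # hypothetical participants
    for start_ms, owner in round_robin_schedule(units, slot_ms=10, total_slots=6):
        print(f"t={start_ms:>3} ms: {owner} transmits")
```

Because only one participant transmits per slot, units do not talk over each other, at the cost of each one waiting for its turn.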

Link 16 was developed as part of the Joint Tactical Information Distribution System (JTIDS), born out of efforts at MITRE and the Air Force Electronic Systems Division dating back to 1967 that also played a role in the development of Airborne Warning and Control System (AWACS) aircraft. JTIDS carries up to 115 kilobits per second with error correction (although the waveform can handle data speeds of up to 1 megabit per second). Link 16 traffic can also be encapsulated in TCP/IP traffic, and there are now efforts to create satellite-based Link 16 connections.

Link 16 and JTIDS became part of the Army Tactical Command and Control System, which began development in 1987. But this first introduction of what amounted to PC-powered C4I to the battlefield had a long, laborious birth: it was intended to be fully deployed by 1997, but did not arrive widely before the beginning of the wars in Iraq and Afghanistan. And along the way, JTIDS got pulled into the Joint Tactical Radio System (JTRS) program and renamed the Multifunctional Information Distribution System (MIDS). JTRS, as we have previously documented, was something of a train wreck.

Since Link 16 was line-of-sight, it didn't hold up well on the ground in the wars in Iraq and Afghanistan. And that created a bit of a deconfliction problem. In 2003, US Air Force aircraft were involved in multiple cases of fatal friendly fire, attacking US and allied forces in cases of mistaken identity. "Blue Force Tracker" systems—that is, systems designed to help friendly units keep track of other friendly units on the battlefield so that they don't accidentally fire on them—had to be adapted to use satellite communications, and they were not widely deployed enough to allow tracking of smaller units.

Meanwhile, the Army was barreling forward with plans for a new network-centric warfare model called Future Combat Systems (FCS), an ambitious force modernization program that included new network protocols (all jammed into the JTRS program) under the overarching "LandWarNet," as well as highly networked individual soldier equipment and unmanned weapons systems. Weapons like the Non-Line-of-Sight Launch System (NLOS-LS) were supposed to provide "distributed fires," using network-controlled launcher boxes seeded around the battlefield to launch GPS-guided missiles against targets on command.

The Navy also partially bought into FCS, tying components of it to the new Littoral Combat Ship program and planning to use a version of the NLOS-LS as well as the Fire Scout unmanned helicopter developed for the Army program. Only the Fire Scout ever made it to the LCS, however, as the NLOS-LS and many other parts of FCS were cancelled. (Ironically, the latest version of the Fire Scout finally incorporated Link 16.)

Perhaps the most visible component of the network-centric warfare strategy still in the game is the F-35 Joint Strike Fighter. The F-35's capabilities as a flying sensor and network node are part of what has kept the aircraft alive throughout its long and tortured trillion-dollar development cycle.

Lessons learned

Some things have worked over the past two decades, for some definitions of "work." The extension of networks to unmanned sensors on the ground, in the air, and on and under the seas has given commanders a much more immediate and flexible way of observing the environment—and, as demonstrated hundreds of times by Predator and Reaper drones, they have also given them more options on how to close the OODA loop quickly.

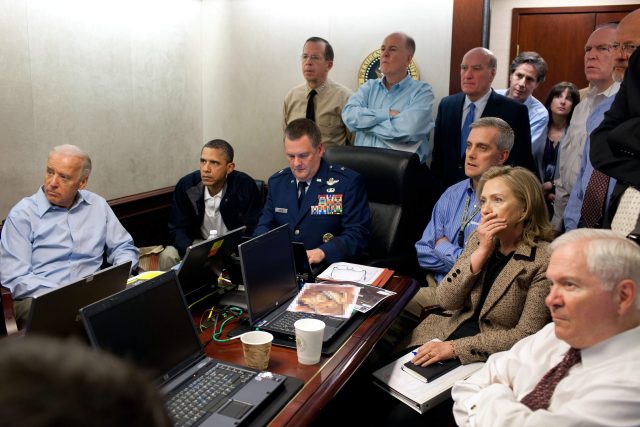

Ad hoc use of technology by troops in the field has also shown the potential of a digitally integrated battlefield. And as demonstrated by the situation room photos on the night of the raid on Osama bin Laden's compound, special forces' use of digital integration can provide national leaders with a much more empathetic connection with those acting on their behalf.

The real question is whether all of this can successfully scale, and whether the military can adjust how it builds and buys things to deal with both the human scale of warfare and the rapid advance of technology. A two-decade rollout of a major system is the opposite of closing the OODA loop quickly.

Stay tuned—we'll be publishing the second part of this two-part report on the history and future of the connected battlespace in a couple of weeks!

January 25, 2021 at 09:00PM

The history of the connected battlespace, part one: command, control, and conquer - Ars Technica